This paper is a discussion of the methods used in Examining the Social Media Echo Chamber (Knight, 2017, in progress) and in the research discussed at http://www.meganknight.uk/journalism/social-media-research-project/ and http://www.meganknight.uk/researc/social-media-research-methods/. The paper is intended to highlight and open for discussion the issues surrounding social media research and its place in current social research.

The importance of researching news on social media

I was sitting in a panel discussion in July of 2016, listening to a series of papers on the media’s coverage of the 2016 UK Referendum on membership in the EU (Brexit) and the role this had played in the result, which was a surprise to many people (apparently even to the people who had campaigned for it). As is typical of media analysis, especially in the UK, discussion focused on newspapers and broadcast media, with a few mentions of Twitter. The researchers had all focused on print newspapers, not on online news sources, and none had considered how news content was targeted and shared through social media when discussing how the media had promoted and responded to Brexit. In fairness, the wider publication (Jackson et al., 2016) did include discussion of social media, but not of social media as the locus of news media – journalism and social media were discussed entirely separately.

Social media has been extensively researched since 2005, and as with all new media, the research has gone through phases. First, there is description and evangelising (Gant, 2007; Gillmor, 2006; Weinberger, 2007) – the focus here is on explaining the new medium and arguing for how it will change everything. The second phase is analytic, examining the new medium in detail and comparing it with old media (Knight, 2013, 2012; Knight and Cook, 2013). The final phase is normalisation, in which the new medium is simply absorbed into all discussions of media, and its place is assured as simply one of many media.

New media and social media should be moving into this final phase, based on the overall usage and penetration of these forms of media (Gottfried and Shearer, 2016), but the rapid expansion of social media into the public sphere has left many researchers playing catch-up with a technology that is moving faster than the academy can track it, and social media (and new media) present many specific technological challenges to conducting research into their content.

Most researchers conducting content analysis into the news media either collect physical examples, or use one of the standardised archives of news content (for newspapers this is usually Nexis, which archives the textual content of thousands of newspapers worldwide, and is readily accessible to most academic researchers). Broadcast media is more complicated, requiring the setting up of recording of broadcast shows, but still technologically straightforward (Berger, 2011; Löffelholz and Weaver, 2008). Social media research methods are neither standardised nor technologically straightforward, and this presents specific challenges.

To start with, there is the problem of boundaries – how does one determine what social media content is news, and what is not? This is a more nuanced discussion than this paper has scope to consider, but it ties in to the fundamental collapse of professional boundaries which is the hallmark of the new and social media age (Gant, 2007; Knight and Cook, 2013). The second challenge is technological – how do you access and store social media content? Research requires that content be fixed and accessible, in order to allow for repeated viewings and analysis, and social media is by its nature fleeting and impermanent.

Social media sites allow for public viewing of content, but control the platform through which the content is viewed, and seldom allow for storage of content for later consumption or analysis. Social media companies grant more extensive access to the platform through an application programming interface (API), which allows for software tools to be written which can access and download the content for analysis. Different companies offer different facilities through their API, and many of them control access or charge for it, considering access to the raw and customisable data feed of social media as an economic product.

The API is a fairly simple tool to use, but few media researchers have any programming skills. It will take a generation before knowledge of programming languages and the ability to write applications to access and analyse data becomes standard within media studies, and this makes researching social media more expensive and time-consuming than analysing more traditional forms. However, this is a problem, given that, increasingly, the news media is on social media, and for researchers who are interested in how the public use, view and engage with the news, social media research skills are fundamental.

Researching social media: the basics: beyond Twitter

Social media is generally accessed through a combination of search and the API, which allows for download and storage of the results of those search functions. Twitter has the most public search (most content is publicly viewable and open to search) and the most publicly accessible API of the main social media sites. Twitter allows any user to use the API to access and store content, up to seven days prior to the date of search, and with a limit of several thousand tweets (the limits vary according to load and are not fixed) (Twitter, n.d.). Because of this, several fairly simple tools are available to allow researchers to access and store data, such as Martin Hawksey’s TAGS service (Hawksey, 2013), and because of the accessibility both of Twitter content and the tools to store it, Twitter is by far the most researched social medium.

However, Twitter is not the most accessed medium for news content – the winner there is clearly Facebook. In 2016, 44% of US adults got some or all of their news and current affairs information through Facebook, and the number is increasing, while only 9% did the same through Twitter (Gottfried and Shearer, 2016). Facebook is clearly where researchers should be looking to understand news media consumption and content.

But Facebook is a more closed system. Twitter is a fairly simple structure – there are users, who post tweets, which can be reposted (retweeted), responded to, or favourited by other users. Tweets can be searched by content, or by simple metadata (user, location, language, date, links or media). All users and posts are by default publicly accessible (users can send private messages, and can limit access to an account’s content, but only by actively choosing to do so). Facebook is far more complicated. There are individual users, and services (pages or apps) which also provide content. Content can be posted, shared, liked, commented on and reshared, and access to content requires the reader to have the prior permission of the person/organisation who posted it. Most individual users’ content is only viewable by people who have a confirmed link with the user (“friends”). Most services’ content is publicly viewable.

Users see content that is based on users they are friends with, and services they have effectively subscribed to (by “liking” the service), but the content they see is controlled by Facebook’s algorithm, which selects from the possible content a user might see, and orders it by a combination of currency, popularity, similarity with content the user has previously engaged with, and other factors. The exact algorithm is secret, and Facebook does not reveal much about it, or how it works (Bakshy et al., 2015; Somaiya, 2014).

Access tools – the API

Facebook does have a public API, which can be used to access and download public content, and content the user already has access to. The API is more complicated than Twitter’s, because the content is more complicated, and has more layers of engagement, detail and permissions. Facebook’s API is mostly provided as a service for people who want to develop applications and games that will run on Facebook, garnering users and their information along the way, and this is a service that Facebook expects one to pay for, which makes it more complicated to access for researchers. Facebook also has extensive analytical tools, which are provided to service users who have applications or pages – they are very useful for accessing data about one’s own audience, but less useful for researchers (Facebook, 2017).

A public research tool, Facepager, was first released in 2012 by Jakob Jünger and Till Keyling under the open-source MIT licence (Strohne, 2017). It is freely available and will download and store data in a reasonably accessible way, within the limits of the API. It does not allow you to see any data that is not publicly available, but is useful for analysing user engagement on public pages. It requires considerable awareness of data formats and the structure of the Facebook Graph API, and would not be easily understood by a researcher without a strong technology background.

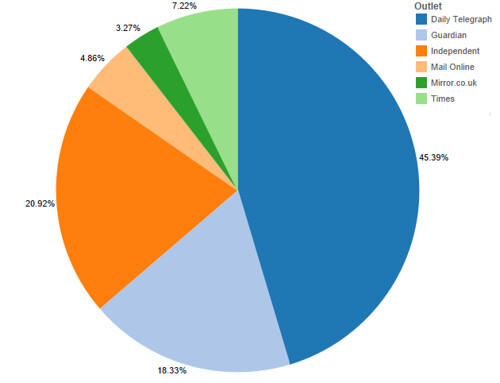

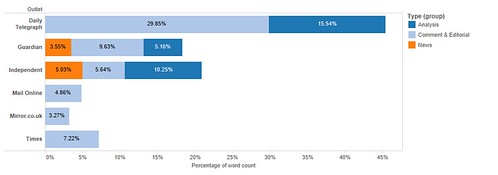

For example, a simple Facepager query of the most recent 50 posts on the Facebook page of each of the main UK news organisations reveals some interesting and useful insights. All pages were posting an average of 25 stories per day, with the exception of the Daily Express, which had only 12 per day. By far the most popular news page, by count of “shares”, was The Independent – its fifty stories were shared 17,500 times. The Guardian was second with 10,600 shares and the Daily Mail a distant third with 4,722 shares. The most popular stories on each service were:

| News organisation | Most shared post |
| --- | --- |
| Daily Express | The man who wants to be our prime minister ladies and gentlemen |
| Daily Mail | The Hollywood legend appeared in good spirits as he took a stroll through Beverly Hills on Friday |
| Daily Mirror | She’d already had a dress specially-made when she found out she couldn’t go |
| The Guardian | Can you still remember your landline number? Did you have a Hotmail account? Did you ever make a mix tape for someone you fancied? If so, you might be a xennial. Take our quiz to find out. |
| The Independent | And an end to austerity |
| The Sun | Low of bullets, this heroic group of soldiers decided to ‘go out fighting’ – with their BARE HANDS… |
| The Telegraph | “There was something deeply emotional about Collins returning against the odds.” |
| The Times and The Sunday Times | Resham Khan was injured in the 84th acid attack in London within six months |

This would indicate a strong interest in entertainment, sport and trivial news: something that is in line with popular perceptions of Facebook’s impact on news and civic society.

But the most shared stories overall were:

| News organisation | Post |
| --- | --- |
| The Independent | And an end to austerity |
| The Independent | Intriguing |
| The Guardian | Can you still remember your landline number? Did you have a Hotmail account? Did you ever make a mix tape for someone you fancied? If so, you might be a xennial. Take our quiz to find out. |
| The Independent | America, 2017 |
| The Guardian | Barack Obama: “If people do not show respect and tolerance, eventually you have war and conflict. Sooner or later societies break down.” |
| The Independent | Burma denies genocide claims |
| The Guardian | “We know that MDMA works really well in helping people who have suffered trauma and it helps to build empathy. Many of my patients who are alcoholics have suffered some sort of trauma in their past and this plays a role in their addiction.” |
| The Guardian | “The love I feel for my two eldest daughters, in their 20s now, is undiminished with the passing of time. I don’t get to express it so much, and they don’t feel the need to. Yet when I look at them sometimes, I feel exactly the same emotion I felt when they were barely walking, and helpless.” |

This list is more hopeful, in that it contains considerably more hard news.
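The aggregation behind figures like these can be sketched in a few lines of Python. This is a minimal illustration, not the analysis actually run for this paper: it assumes the Facepager results have been exported as rows holding a page name, the post text and a share count. The field names (`page`, `message`, `shares`) and the sample rows are hypothetical placeholders.

```python
from collections import defaultdict

# Hypothetical rows standing in for a Facepager export; the field names
# and values are placeholders, not data from the study.
posts = [
    {"page": "Page A", "message": "Story 1", "shares": 120},
    {"page": "Page A", "message": "Story 2", "shares": 30},
    {"page": "Page B", "message": "Story 3", "shares": 75},
    {"page": "Page B", "message": "Story 4", "shares": 200},
]


def total_shares_by_page(rows):
    """Sum the share counts of all posts for each page."""
    totals = defaultdict(int)
    for row in rows:
        totals[row["page"]] += row["shares"]
    return dict(totals)


def top_post_by_page(rows):
    """Return the single most shared post for each page."""
    best = {}
    for row in rows:
        current = best.get(row["page"])
        if current is None or row["shares"] > current["shares"]:
            best[row["page"]] = row
    return best


def most_shared_overall(rows, n=3):
    """Return the n most shared posts across all pages."""
    return sorted(rows, key=lambda row: row["shares"], reverse=True)[:n]


totals = total_shares_by_page(posts)
per_page = top_post_by_page(posts)
overall = most_shared_overall(posts, n=2)
```

The per-page view and the overall view are computed separately because, as the two lists above show, they can tell quite different stories about the same corpus.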

More detailed analysis would give the number of comments per story, and even the identities of those who comment. There is considerable data available here, and considerable scope for further research.

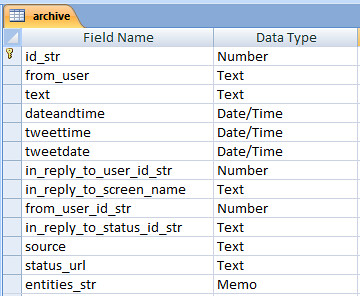

But if the researcher wants to access other users’ data (i.e., to see what other people see and respond to), the researcher will need to develop an application that runs on the web, is subscribed to by users and is cleared by Facebook’s App Review process. This requires considerable web programming knowledge and access to a web server from which to run the application. In my own case, I use PHP and export the data to MySQL, which then allows me to use standard database tools to analyse it.
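The general pattern of loading collected items into a relational database and then querying them can be sketched as follows. This is an illustration under stated assumptions rather than the PHP and MySQL code used in this research: it uses Python with an in-memory SQLite database as a stand-in for the MySQL server, and the table name, column names and sample records are all hypothetical.

```python
import sqlite3

# Hypothetical records as they might arrive from an API response;
# the keys and values are placeholders, not the author's schema or data.
records = [
    {"post_id": "1", "user_ref": "u01", "post_type": "link", "created": "2017-06-01"},
    {"post_id": "2", "user_ref": "u01", "post_type": "status", "created": "2017-06-02"},
    {"post_id": "3", "user_ref": "u02", "post_type": "link", "created": "2017-06-02"},
]

# An in-memory SQLite database stands in for the MySQL server.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE posts ("
    "post_id TEXT PRIMARY KEY, user_ref TEXT, post_type TEXT, created TEXT)"
)

# Named placeholders keep the values separate from the SQL text.
conn.executemany(
    "INSERT INTO posts (post_id, user_ref, post_type, created) "
    "VALUES (:post_id, :user_ref, :post_type, :created)",
    records,
)
conn.commit()

# Once stored, ordinary SQL answers simple research questions,
# for example how many items of each type were collected.
counts = dict(
    conn.execute(
        "SELECT post_type, COUNT(*) FROM posts GROUP BY post_type ORDER BY post_type"
    )
)
```

Storing an anonymised reference (`user_ref` here) rather than a name is one way the database design itself can support the ethical requirements discussed later in this paper.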

The process uses the Facebook Graph API, which gives data about a user, including:

- user_hometown

- user_religion_politics

- user_likes

- user_status

- user_about_me

- user_location

- user_tagged_places

- user_birthday

- user_photos

- user_videos

- user_education_history

- user_posts

- user_website

- user_friends

- user_relationship_details

- user_work_history

- user_games_activity

- user_relationships

All of these pieces of information require the explicit permission of the user, which is obtained through the application install interface. The basic creation of an app on the system and its install by the end user gives the researcher access to the user’s name, public profile (user_about_me) and list of friends. All other information requires the application to go through the Facebook app approval process, and to justify the use of the data. This is not onerous, although it assumes that you are a commercial user, and is rather opaque. There is no clear access for researchers, or evidence of the importance of research.
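To make the permission request concrete, the sketch below builds the address of the consent dialog to which a participant would be sent, with the requested permissions passed in the `scope` parameter. It is a hedged illustration only: the app ID, the redirect address and the API version string are placeholder assumptions, the three permissions are simply taken from the list above, and nothing is sent over the network.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Permissions named in the list above; which ones an app may actually
# request depends on Facebook's review of the app.
REQUESTED_PERMISSIONS = ["user_likes", "user_posts", "user_friends"]


def build_login_url(app_id, redirect_uri, permissions, api_version="v2.9"):
    """Build the URL of the consent dialog a participant is sent to.

    app_id, redirect_uri and api_version are placeholders supplied by
    the researcher; this function only assembles a string.
    """
    query = urlencode(
        {
            "client_id": app_id,
            "redirect_uri": redirect_uri,
            "scope": ",".join(permissions),
        }
    )
    return f"https://www.facebook.com/{api_version}/dialog/oauth?{query}"


login_url = build_login_url(
    app_id="APP_ID_PLACEHOLDER",
    redirect_uri="https://example.org/callback",
    permissions=REQUESTED_PERMISSIONS,
)

# Reading the scope back out shows exactly what the participant is asked for.
requested = parse_qs(urlparse(login_url).query)["scope"][0].split(",")
```

Because the dialog itself is rendered by Facebook, the list in `scope` is the complete statement of what the participant is being asked to share.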

The API is extremely limited, however. It does not allow you to see the user’s “feed”, the list of content the user sees, only to access content the user has posted, shared, or applications/pages they have followed. It also only allows you to access the most recent 25 of each of those items. As such, although it shows some evidence of engagement with content, it does not show the full nature of how the user experiences Facebook, and for news researchers, does not give the full picture of a user’s engagement with news by showing articles they have clicked on, read, or even seen in their feed.

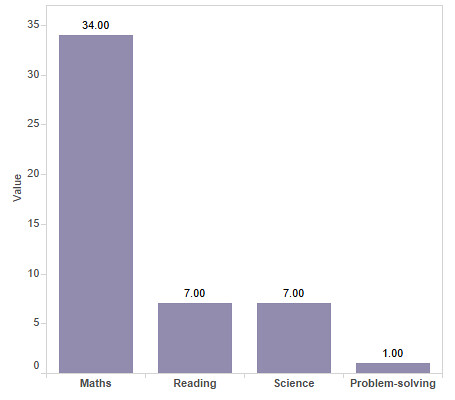

In my most recent research, a corpus of 92 users was generated (mostly university students), and preliminary findings indicate that only 4% of the content followed on Facebook is explicitly news content, and only 10% of it is explicitly civic-minded (social and political campaigns, or news content) (Knight, 2017).
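Percentages of this kind come from coding each followed page into a category and dividing the matching count by the total. A minimal sketch of that arithmetic follows; the category labels and counts are hypothetical placeholders, not the study’s data or coding scheme.

```python
from collections import Counter

# Hypothetical coding of followed pages; labels and counts are placeholders.
coded_pages = ["news"] * 5 + ["campaign"] * 5 + ["entertainment"] * 40

# Civic-minded content is taken here to mean campaigns plus news.
CIVIC_CATEGORIES = {"news", "campaign"}


def share(items, categories):
    """Return the percentage of items whose label is in categories."""
    if not items:
        return 0.0
    counts = Counter(items)
    matching = sum(counts[label] for label in categories)
    return 100.0 * matching / len(items)


news_share = share(coded_pages, {"news"})
civic_share = share(coded_pages, CIVIC_CATEGORIES)
```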

Although the tools Facebook already provides are useful, and open up considerable research for those with the skills and expertise to use them, there remains a significant gap in the access researchers need in order to adequately consider the impact the service is having on civic society. The “Facebook algorithm” and the subsequent “echo chamber” it has created have become something of a mythical beast in the public sphere. To date, there has been one published paper on the subject, which analysed the extent to which users’ feeds limited their exposure to points of view with which they disagreed. Bakshy et al.’s paper found that users were less exposed to content that conflicted with their stated political affiliation (political viewpoint is a field in the Facebook profile), and less likely to click on or share a link that they disagreed with (Bakshy et al., 2015). Eytan Bakshy worked at Facebook and had unique levels of access to the raw data, something no researcher has had since. As Facebook becomes increasingly important in the civic sphere, it becomes more and more essential that researchers be given access to the full corpus of data, in order to adequately assess the impact of this increasingly dominant media company.

Ethical concerns

Social media is widely perceived as private communication by its users. Facebook, especially, is viewed as private, and not something that random members of the public should be able to see. Researching social media has the tendency to trigger concerns about the ethics of looking at people’s social media content, as though it were truly private.

In the case of Twitter, there is now considerable awareness of the public nature of the service, and in several countries there is legal precedent that recognises Twitter posts as legally the same as any other public speech, which renders ethical concerns largely moot.

Facebook is more complicated – public content is common, but it is not clear to what extent users are aware that their posts are public, despite Facebook giving users considerable control over their own privacy settings. In addition, Facebook makes a large number of interactions with public pages public, so in my corpus of news articles mentioned above, I have the Facebook names of everyone who commented on any of the stories in the corpus. Logically, this makes sense, but I suspect that if I collated those comments and contacted their authors for additional commentary, they would be surprised, and a fair number would feel that I had invaded their privacy. This creates a problem for researchers – ethical guidelines require that people not be observed without their knowledge and consent, but how do you get the consent of someone who has posted publicly, but thinks they are in private?

When an application is created using the Facebook API, the user is prompted to allow the application to access their content, and because this prompt is generated by Facebook, not the researcher, there can be no deception. However, within the corpus of data that can be extracted from the feed are names and potentially identifying details of friends of the person who consented. In my corpus of data there are multiple posts that reference things like drug taking with named friends: although the names of the posters are stripped out (a requirement of the research approval; Facebook has no problem with my knowing the names of people who participated), it would be fairly easy for me to identify the poster and their friends.

Facebook’s permissions are, in fact, considerably less strict than research ethics guidelines would normally find acceptable, since they are designed to maximise revenue from advertising (data about their users is what Facebook sells, and the more detailed and specific that data is, the more lucrative it is), leaving academics to construct their own guidelines and norms within the practice.

Further questions

This is not intended as a comprehensive paper, but as a starting point for discussion and considerations for the development of methods, guidelines and tools for researching Facebook’s impact on the news. A few considerations:

- Development of public tools for Facebook research. Facepager is open source, and could be developed further, with the right skills/tools. It is not clear what its maintainers’ plans for it are, but it is built on an older version of the API, and is likely to stop working unless updated.

- Petitioning Facebook for additional access for researchers. Facebook can be responsive and helpful in many cases, and it might be possible to approach them with a view to developing a more open version of the API for researchers with bona fides.

- Development of sandbox and black box research tools?

References:

Bakshy, E., Messing, S., Adamic, L.A., 2015. Exposure to ideologically diverse news and opinion on Facebook. Science 348, 1130–1132. doi:10.1126/science.aaa1160

Berger, A., 2011. Media and communication research methods: an introduction to qualitative and quantitative approaches, 2nd ed. SAGE Publications, Thousand Oaks.

Facebook, 2017. Facebook for Developers [WWW Document]. Facebook Dev. URL https://developers.facebook.com/products/ (accessed 7.2.17).

Gant, S., 2007. We’re all journalists now: the transformation of the press and reshaping of the law in the Internet age, 1st Free Press hardcover ed. Free Press, New York.

Gillmor, D., 2006. We the media: grassroots journalism by the people, for the people, Pbk. ed. O’Reilly, Beijing; Sebastopol, CA.

Gottfried, J., Shearer, E., 2016. News Use Across Social Media Platforms 2016. Pew Res. Cent. Journal. Proj.

Hawksey, M., 2013. Twitter Archiving Google Spreadsheet TAGS v5. MASHe.

Jackson, D., Thorsen, E., Wring, D., 2016. EU Referendum Analysis 2016.

Knight, M., 2017. Examining the Social Media Echo Chamber. Presented at the International Association for Media and Communications Research.

Knight, M., 2013. The revolution will be facebooked, broadcast and published. doi:10.13140/RG.2.1.4948.4567

Knight, M., 2012. Journalism as usual: The use of social media as a newsgathering tool in the coverage of the Iranian elections in 2009. J. Media Pract. 13, 61–74.

Knight, M., Cook, C., 2013. Social media for journalists: principles and practice. Sage Publications, [S.l.].

Löffelholz, M., Weaver, D.H., 2008. Global journalism research: theories, methods, findings, future. Blackwell Pub., Malden, MA.

Somaiya, R., 2014. How Facebook Is Changing the Way Its Users Consume Journalism. N. Y. Times.

Strohne, 2017. Facepager.

Twitter, n.d. API Overview — Twitter Developers [WWW Document]. URL https://dev.twitter.com/overview/api (accessed 7.2.17).

Weinberger, D., 2007. Everything is miscellaneous: the power of the new digital disorder. Henry Holt and Company, New York.